OpenAI and Anthropic Trade Blows with GPT-5.3 and Opus 4.6

Plus: Harvard finds AI expanding workloads, 100+ experts warn of real-world AI risks, Kling 3.0 pushes video AI forward, and more.

Hello Engineering Leaders and AI Enthusiasts!

This newsletter brings you the latest AI updates in just 4 minutes! Dive in for a quick summary of everything important that happened in AI over the last week.

And a huge shoutout to our amazing readers. We appreciate you 😊

In today’s edition:

🏆 OpenAI’s GPT-5.3-Codex tops AI coding charts

🤖 Anthropic’s Claude Opus 4.6 goes multi-agent

🎬 Kling 3.0 brings more length and consistency to AI video

⚠️ AI safety risks move from theory to reality

🧠 Harvard research reveals AI adds more work

💡 Knowledge Nugget: Developing AI Taste: Understanding the Positioning Battle in AI by Johnson Shi

Let’s go!

OpenAI’s GPT-5.3-Codex tops AI coding charts

OpenAI just unveiled GPT-5.3-Codex, its new flagship coding model that blends advanced programming skills with stronger reasoning into one faster system. The company says early versions were already used internally to catch bugs in training runs, manage rollout processes, and analyze evaluation results.

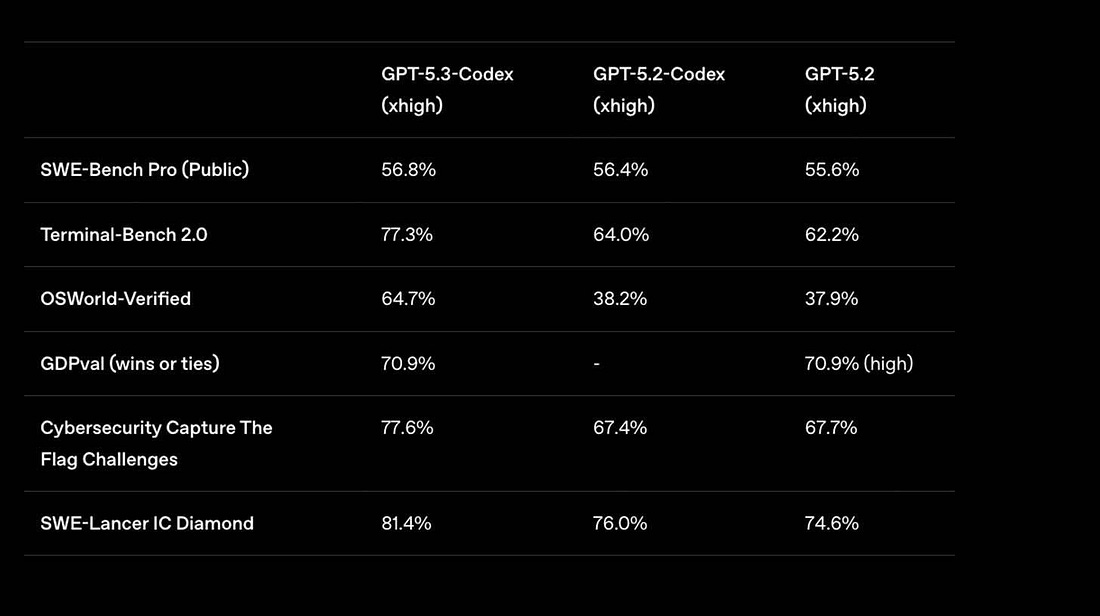

The model now leads major agentic coding benchmarks, beating Opus 4.6 by roughly 12 percentage points on Terminal-Bench 2.0. On OSWorld, which measures how well AI can operate desktop environments, it scored 64.7%, nearly doubling the previous Codex model's score. It is also the first model OpenAI has rated "High" for cybersecurity risk, and the company has pledged $10M in API credits to fund defensive security research.

Why does it matter?

What once felt like a race for better autocomplete is quickly turning into a battle over autonomous software agents. The coding benchmark wins matter, but the bigger story is AI beginning to operate inside the very systems that build it.

Anthropic’s Claude Opus 4.6 goes multi-agent

Anthropic just released Claude Opus 4.6, its most powerful model yet, and it's pushing AI deeper into everyday workflows. The headline feature is "agent teams" in Claude Code, which let multiple AI agents split up a project and work on it in parallel instead of step by step.

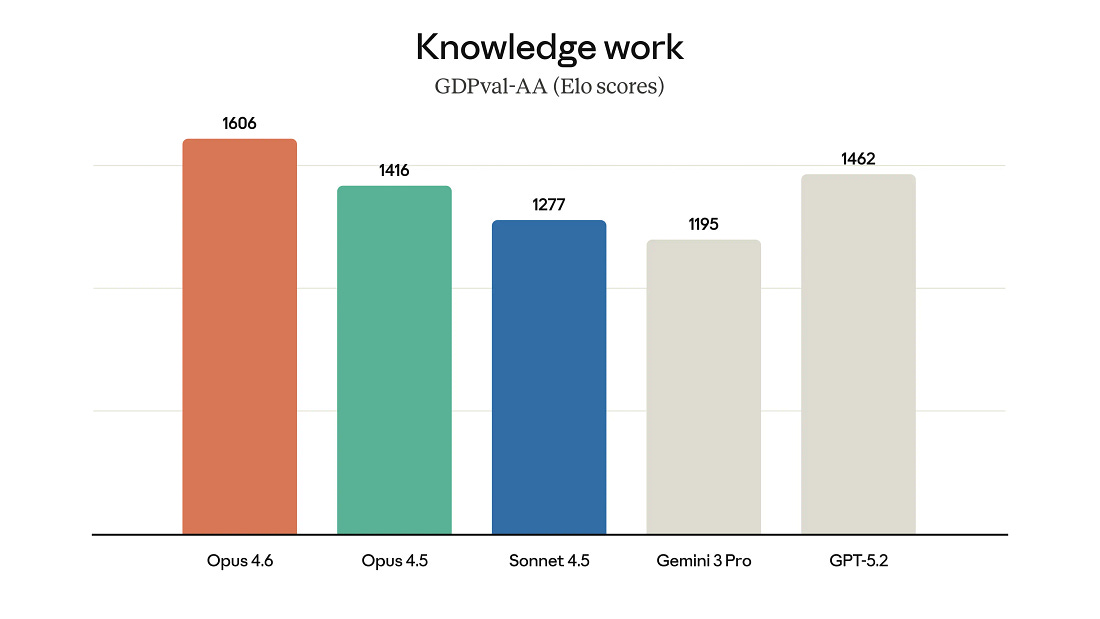

Opus 4.6 also gets a 1M-token context window, making it practical to analyze large documents and codebases in one pass. New Excel and PowerPoint sidebars let Claude read existing templates and build spreadsheets or decks directly inside the Office apps. The model topped most agentic benchmarks, including a sharp jump on ARC-AGI-2.

Why does it matter?

It's another big moment in the model race, with Opus 4.6 landing just as GPT-5.3-Codex pushes the ceiling higher on coding and agents. Upgrade cycles are tightening, multi-agent workflows are becoming real, and the length of tasks models can handle on their own keeps stretching. The idea that progress is slowing gets harder to defend with each release.

Kling 3.0 brings more length and consistency to AI video

Kuaishou's Kling just launched Kling 3.0, merging text-to-video, image-to-video, and native audio into one multimodal model. The system now supports clips up to 15 seconds long and introduces a new "Multi-Shot" mode that automatically generates different camera angles within a single project.

Creators can lock in characters and scenes across shots using reference “anchors,” while native audio now supports multi-character voice cloning and expanded multilingual dialogue. For now, access is limited to Ultra-tier users, with a broader rollout expected soon.

Why does it matter?

Kling has already been hovering near the top of AI video rankings, and 3.0 looks like another step forward in capability. The move to a unified model with multi-shot control and native audio reflects where the industry is heading: away from isolated clips and toward structured production workflows with built-in consistency and creative control.

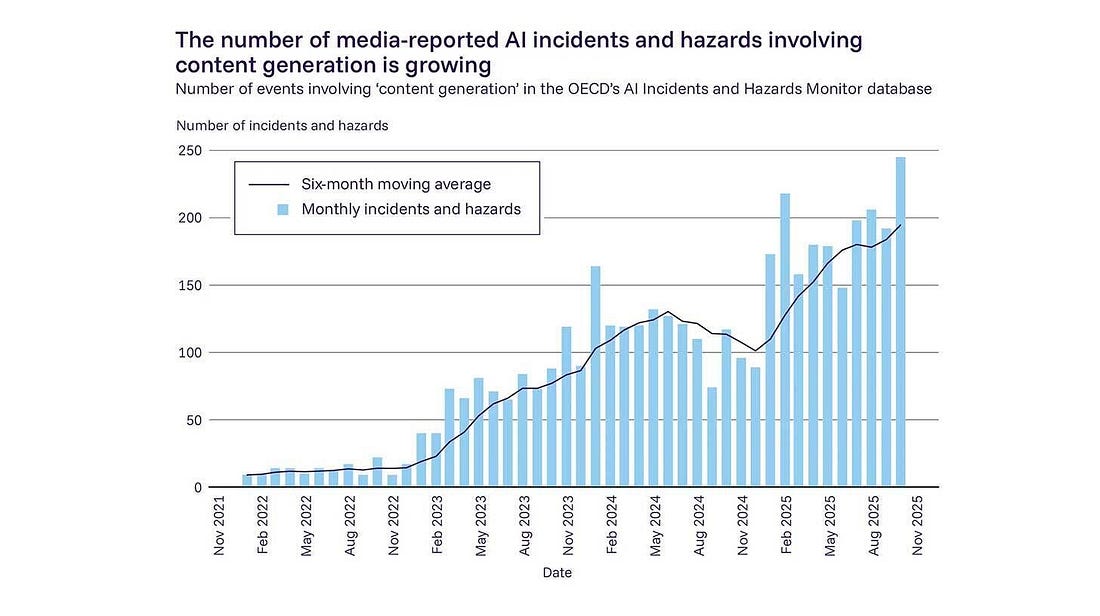

AI safety risks move from theory to reality

More than 100 AI experts, led by Yoshua Bengio, released the second International AI Safety Report, warning that threats once considered hypothetical are now showing up in the real world. The report points to growing evidence of AI being used in cyberattacks, deepfake fraud, manipulation campaigns, and other criminal activity.